Building My AI Engine

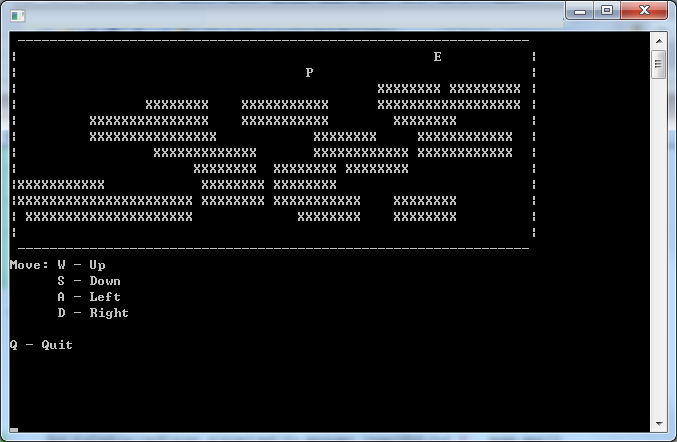

These days, I've been turning my attention towards AI. In the past, I spent so much time working on graphics components of the engine in the past, that I've never put the effort into building a good AI system. When I was developing Genesis SEED, I wrote a complex AI system, but it was too unwieldy and complicated so I decided to start from scratch when working on the AI system that would go into Auxnet. It’s still in its beginning stages but in the long term, I’d like to release the AI system as an open source project. I want to build a general AI framework that will work on many different game types. How and why do I want to make a general AI framework? I want to build this game engine in a modular way to allow for future expansion. To achieve this modular approach, the code will naturally take a framework with attached plug-ins design. I know that AI is very game specific, but what I want to do is provide a collection of techniques that the AI agents can have to complete ...